Open Source Radiology Platform Redesign – XNAT.org

From January to August, I led the redesign of XNAT. What's XNAT? From the description Google pulls from the site's metadata: "XNAT is an extensible open-source imaging informatics software platform dedicated to imaging-based research." It's essentially a platform that radiologists and researchers work on.

I've worked in health IT for a long time, and this still surprised me. Personally, I thought it was a cool little piece of open-source software: it improves outcomes and facilitates research and progress, all while keeping a free access model. It was an attractive product in every respect, so when it came time in the new year (January 2023, when the project began) to start something new, this was an easy choice.

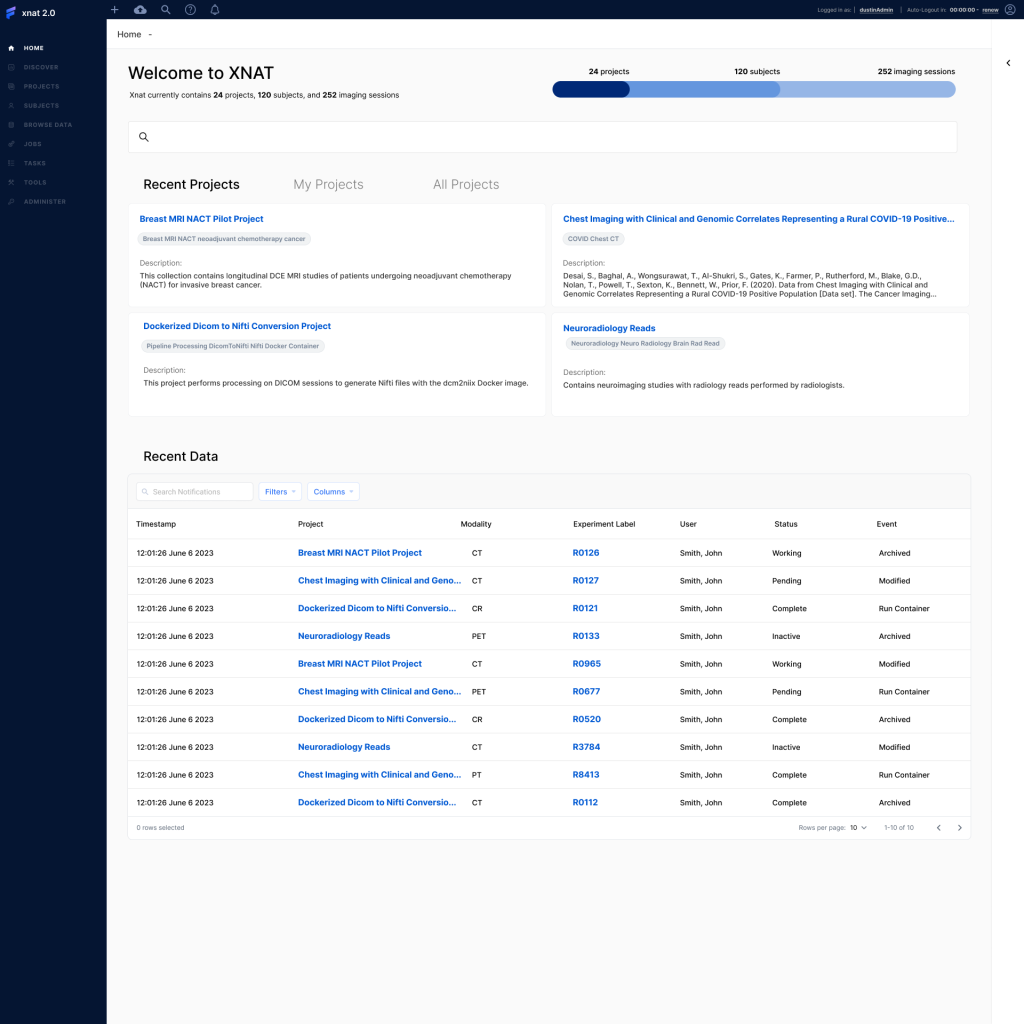

XNAT 2.0

That's what we started calling it. I didn't spend much time on branding; I just needed something in the top left as a temporary placeholder until a logo was ready. Redesigning an open-source product that had evolved so independently at every iteration was a big deal to the community. Anyone could write a module, contribute it back to the XNAT world, and that was how it went. Each piece functioned independently of everything else, which made for a difficult experience for users.

The goal of this engagement was to redesign the software's front end. That meant a discovery phase with lots of interviews and research. Working directly with some of the platform's original authors and some of the most impressive engineering teams I've ever seen, we defined scenarios and the users in them, and built a Figma prototype around specific objectives for those users.

Home

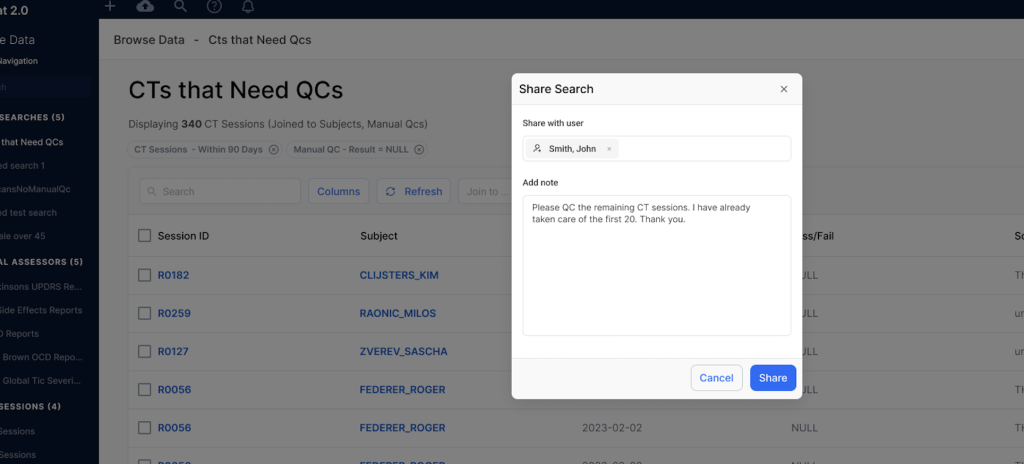

Let's get to it. As is the case in most product design situations, you have an established design system that needs to be followed. Working in a rapid production environment means using the existing components of the visual library, so you can stand up high-fidelity, production-ready deliverables quickly. There was a lot of list building, sharing, task creation, and delegation, but it all took place in the viewer.

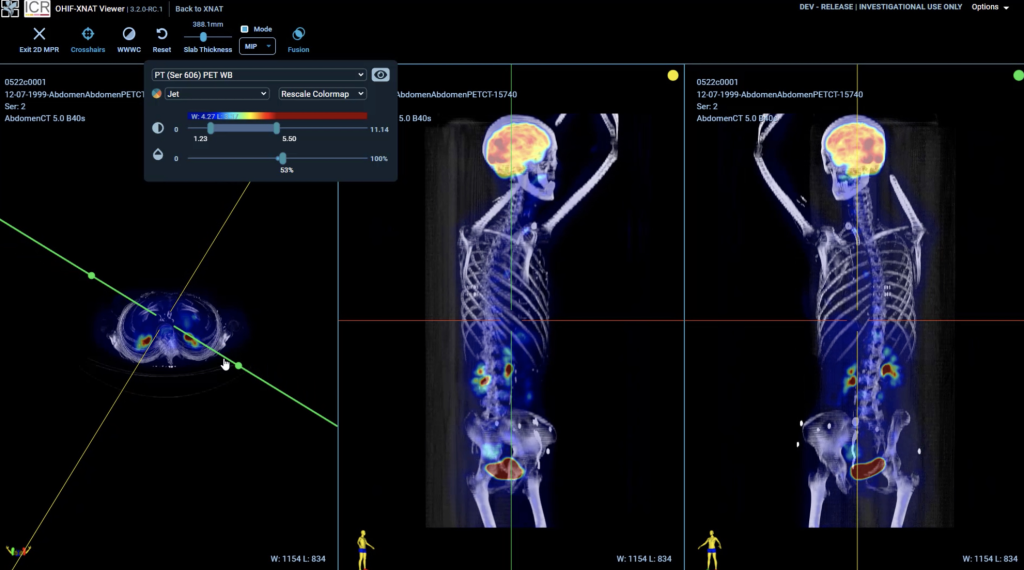

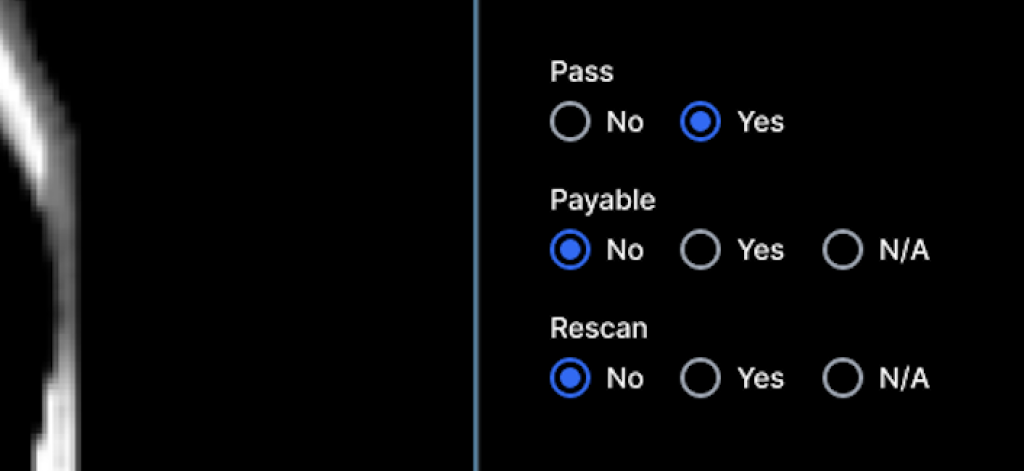

This is a screenshot from one of the prototypes. Behind the modal, you can see the user is looking at a list titled "CTs that Need QCs." That's a user-created name for a "Saved Search" that has been filtered and joined with other lists to produce the single list whose parameters the user wanted to save.

To perform Quality Control (QC) inside the viewer itself, the user looks at the image and relies on their training and background to determine whether the scan is usable: that it isn't cut off somewhere and doesn't have a blinding light in the middle (crazy things can happen in the output of the machines). There can also be burned-in pixel data, specifically PHI (protected health information). The key to this research platform is protecting patient data, and that means anonymity. If the person's name or any other identifying data is present in the image, it has to be de-identified (edited to cover it up or to remove the pixels altogether).

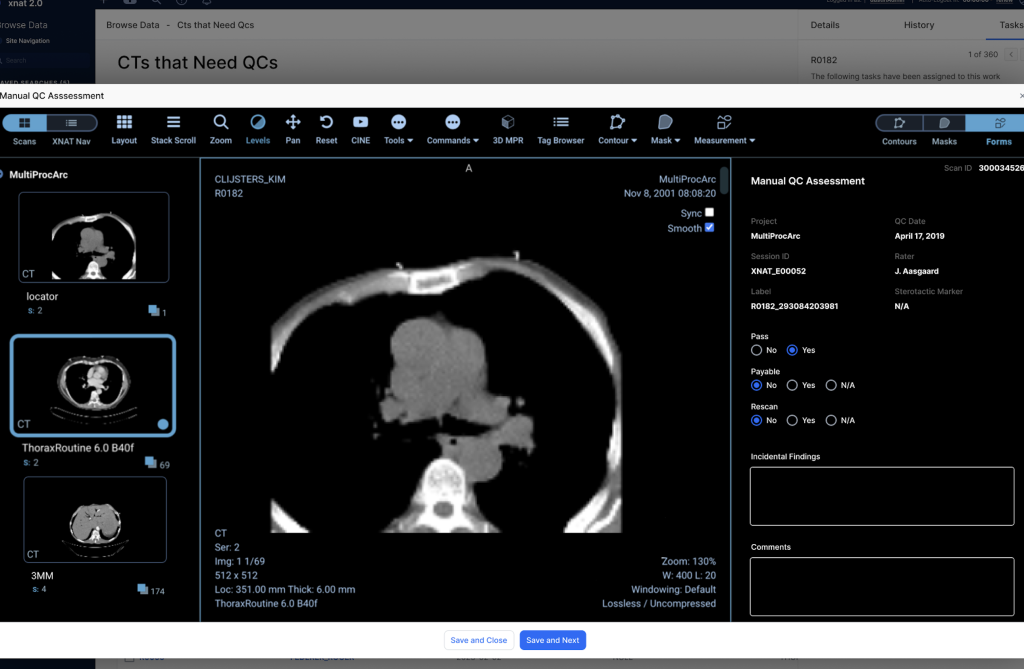

Task delegation/list sharing

Users work through as many as they can, but there's often a team of people doing this because of the sheer amount of data being generated by hospital PACS (picture archiving and communication systems). They're helped by scripts and algorithms, but there still needs to be some final stamp of approval before the data is distributed for research.

Here's an example of a radiologist finishing 20 Quality Control checks and asking a peer to take a look at the remaining items on his work list. Task creation and delegation were added to the experience to give the workflow a sense of cohesion.